Luigi success

So Luigi, our open sourced workflow engine in Python, just recently passed 1,000 stars on Github, then shortly after passed mrjob as (I think) the most popular Python package to do Hadoop stuff. This is exciting!

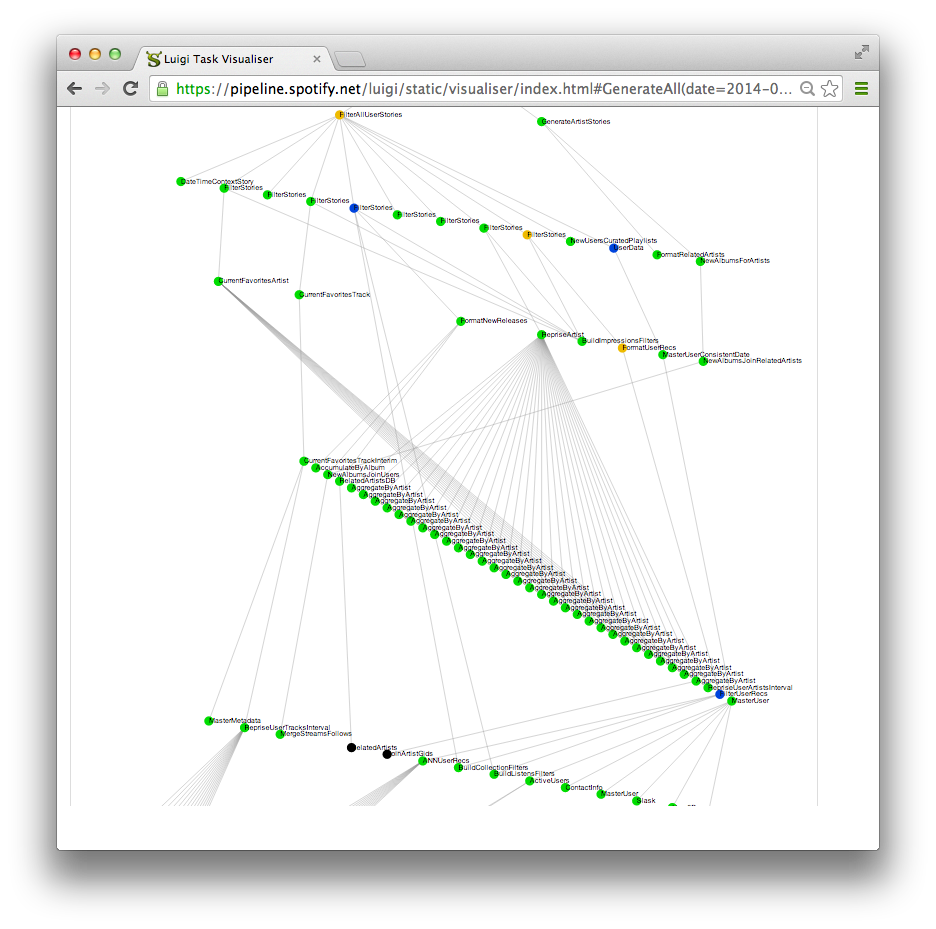

A fun anecdote from last week: we accidentally deleted roughly 10TB of data on HDFS, and the output of 1,000s of jobs. This could have been a disaster, but luckily most of the data was intermediate, and luckily everything we do is powered by Luigi meaning it’s encoded as a big huge dependency graph in Python. Some of it is Hadoop jobs, some of it inserts data in Cassandra, some of it trains machine learning models, and much more. The Hadoop jobs are a happy mixture between inline Python jobs and jobs using Scalding.

So anyway, Luigi happily picked up that a bunch of data was missing, traversed the dependency graph backwards, and scheduled everything it needed. A few hours (and a heavly loaded cluster) later, everything was recreated.